Head-Up Displays (HUDs), Helmet- or Head-Mounted Displays (HMDs), and see-through gun sights have been extensively investigated over the past decades for military applications by major defense contractors. While the first see-through HMD optical combiners were based on conventional reflective/refractive optics, the first and most efficient HUD combiner technologies were instead based on holographic optics. A multitude of HMD optical architectures are available on the market today (in both the defense and consumer electronics markets), designed to a wide range of requirements, each with its own advantages and shortcomings. We will review the state of the art in this industry.

Bio. For over 20 years, Bernard has made significant scientific contributions as researcher, professor, consultant, advisor, instructor, and author, making major contributions to digital micro-optical systems for consumer electronics, generating IP, and teaching and transferring technological solutions to industry. Many of the world’s largest producers of optics and photonics products have consulted with him on a wide range of optics and photonics technologies, including laser materials processing, optical security, optical telecom/datacom, optical data storage, optical computing, optical motion sensors, pico-projectors, light emitting diode displays, optical gesture sensing, three-dimensional remote sensing, digital imaging processing, and biotechnology sensors.

Bernard has generated 28 patents, of which nine have been granted in the United States, nine have been granted in Europe, two are awaiting filing numbers, and eight are pending. He has published four books, a book chapter, 88 refereed publications and proceedings, and numerous technical publications. He has also been involved in several European Research Projects in Micro-Optics, including the Eureka Flat Optical Technology and Applications (FOTA) Project and the Network for Excellence in Micro-Optics (NEMO) Project.

Bernard is currently working with the Glass group at Google[X] labs in Mountain View, CA.

Advanced information technology has become a key enabler in modern media and entertainment. This comprises the production of animation or live action films, the design of next-generation toys and consumer products, or the creation of richer experiences in theme parks. At Disney Research Zurich, more than 200 researchers and scientists are working at the forefront of innovation in entertainment technology. Our research covers a wide spectrum of different fields, including graphics and animation, human computer interaction, wireless communication, computer vision, materials and design, robotics, and more. In this talk I will demonstrate how innovations in information technology and computational methods developed at Disney Research are serving as platforms for future content creation. I will emphasize the transformative power of 3D printing, digital fabrication, and our increasing ability to make the whole world responsive and interactive.

We present an indoor tracking system based on two wearable inertial measurement units for tracking in home and workplace environments. It applies simultaneous localization and mapping with user actions as landmarks, themselves recognized by the wearable sensors. The approach is thus fully wearable and no pre-deployment effort is required. We identify weaknesses of past approaches and address them by introducing heading drift compensation, stance detection adaptation, and ellipse landmarks. Furthermore, we present an environment-independent parameter set that allows for robust tracking in daily-life scenarios. We assess the method on a dataset with five participants in different home and office environments, totaling 8.7h of daily routines and 2500m of travelled distance. This dataset is publicly released. The main outcome is that our algorithm converges 87% of the time to an accurate approximation of the ground truth map (0.52m mean landmark positioning error) in scenarios where previous approaches fail.

In this paper we present a full-scaled real-time monocular SLAM using only a wearable camera. Assuming that the person is walking, the perception of the head oscillatory motion in the initial visual odometry estimate allows for the computation of a dynamic scale factor for static windows of N camera poses. Improving on this method, we introduce a consistency test to detect non-walking situations and propose a sliding window approach to reduce the delay in the update of the scaled trajectory. We evaluate our approach experimentally on an unscaled visual odometry estimate obtained with a wearable camera along a path of 886 m. The results show a significant improvement with respect to the initial unscaled estimate, with a mean relative error of 0.91% over the total trajectory length.

Physical activity monitoring has recently become an important topic in wearable computing, motivated by e.g. healthcare applications. However, new benchmark results show that the difficulty of the complex classification problems exceeds the potential of existing classifiers. Therefore, this paper proposes the ConfAdaBoost.M1 algorithm. The proposed algorithm is a variant of AdaBoost.M1 that incorporates well-established ideas for confidence-based boosting. The method is compared to the most commonly used boosting methods using benchmark datasets from the UCI machine learning repository, and it is also evaluated on an activity recognition and an intensity estimation problem, including a large number of physical activities from the recently released PAMAP2 dataset. The presented results indicate that the proposed ConfAdaBoost.M1 algorithm significantly improves the classification performance on most of the evaluated datasets, especially for larger and more complex classification tasks.

Personalization of activity recognition has become a topic of interest recently. This paper presents a novel concept, using a set of classifiers as a general model and retraining only the weights of the classifiers with new labeled data from a previously unknown subject. Experiments with different methods based on this concept show that it is a valid approach for personalization. An important benefit of the proposed concept is its low computational cost compared to other approaches, making it also feasible for mobile applications. Moreover, more advanced classifiers (e.g. boosted decision trees) can be combined with the new concept to achieve good performance even on complex classification tasks. Finally, a new algorithm is introduced based on the proposed concept, which outperforms existing methods, thus further increasing the performance of personalized applications.
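
The idea of keeping a fixed set of classifiers and retraining only their weights can be illustrated with a minimal sketch. The abstract does not state the update rule, so the log-odds weighting by per-classifier accuracy used here is an assumption chosen for illustration, and the names `fit_weights` and `weighted_vote` are hypothetical.

```python
import numpy as np

def fit_weights(predictions, labels, eps=1e-6):
    """Assumed rule: weight each fixed classifier by the log-odds of its
    accuracy on the new subject's labeled samples.

    predictions: (n_classifiers, n_samples) integer class labels
    labels:      (n_samples,) true class labels for the new subject
    """
    predictions = np.asarray(predictions)
    labels = np.asarray(labels)
    accuracy = (predictions == labels).mean(axis=1)
    # Clip so that perfect or always-wrong classifiers get finite weights.
    accuracy = np.clip(accuracy, eps, 1.0 - eps)
    return np.log(accuracy / (1.0 - accuracy))

def weighted_vote(predictions, weights, n_classes):
    """Combine the classifiers' votes with the personalized weights."""
    predictions = np.asarray(predictions)
    n_samples = predictions.shape[1]
    scores = np.zeros((n_classes, n_samples))
    sample_idx = np.arange(n_samples)
    for preds, w in zip(predictions, weights):
        # Each classifier adds its weight to the class it voted for.
        scores[preds, sample_idx] += w
    return scores.argmax(axis=0)
```

Only the weight vector is refit for a new subject, so the cost is one pass over the labeled samples per classifier. That is consistent with the low computational cost the abstract emphasizes, although the actual algorithms in the paper may differ.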

Bio. Founder of Misfit Wearables, makers of highly wearable computing products, including the award-winning Shine, an elegant activity monitor. Founder of AgaMatrix, makers of the world's first iPhone-connected hardware medical device (Red Dot & GOOD Design Awards). Built AgaMatrix from a two-person start-up to shipping 15+ FDA-cleared medical device products, 1B+ biosensors, 3M+ glucose meters for diabetics. Worked at Microsoft Research on machine learning / linguistic technologies. Studied math (BS) at UIUC and linguistics (PhD) under Noam Chomsky at MIT. Knows a number of interesting languages and is a patron of good product design. Believes an era of wearable computing is coming soon where UX design will be geared towards glance-able displays as well as non-visual modalities.

Bio. Martin Wirz received his MSc ETH in 2008 from ETH Zürich. Afterwards, he joined the Wearable Computing Laboratory at ETH Zürich as a research assistant and received his Dr. Sc. ETH (PhD) degree in 2013. In his research, he focused on crowd sensing, context-aware systems and social network analysis. In 2013, he started working as a Product Manager at Sensirion AG, Switzerland, where he is responsible for mobile software components which power the temperature and humidity sensor integrated in mobile devices. More information: http://smart.sensirion.com

An eartip made of conductive rubber providing bio-potential electrodes is proposed for a daily-use earphone-based eye gesture input interface. Several prototypes, each with three electrodes to capture the electrooculogram (EOG), are implemented on earphones and examined. Experiments with one subject over a 10-day period reveal that all prototypes capture EOG similarly, but they differ in baseline stability and motion artifacts. Another experiment, conducted on a simple eye-controlled application with six subjects, shows that the proposed prototype minimizes motion artifacts and offers good performance. We conclude that conductive rubber with Ag filler is the most suitable setup for daily use.

This paper presents the design and implementation of a wearable oral sensory system that recognizes human oral activities, such as chewing, drinking, speaking, and coughing. We conducted an evaluation of this oral sensory system in a laboratory experiment involving 8 participants. The results show 93.8% oral activity recognition accuracy when using a person-dependent classifier and 59.8% accuracy when using a person-independent classifier.

This report proposes a thermal media system, ThermOn, which enables users to feel dynamic hot and cold sensations on their body corresponding to the sound of music. Thermal sense plays a significant role in the human recognition of environments and influences human emotions. By employing thermal sense in the music experience, which also greatly affects human emotions, we have successfully created a new medium with an unprecedented emotional experience. With ThermOn, a user feels enhanced excitement and comfort, among other responses. For the initial prototype, headphone-type interfaces were implemented using a Peltier device, which allows users to feel thermal stimuli on their ears. Along with the hardware, a thermal-stimulation model that takes into consideration the characteristics of human thermal perception was designed. The prototype device was verified using two methods: the psychophysical method, which measures the skin potential response, and the psychometric method, using a Likert-scale questionnaire and open-ended interviews. The experimental results suggest that ThermOn (a) changes the impression of music, (b) provides comfortable feelings, and (c) alters the listener's ability to concentrate on music in the case of a rock song. Moreover, these effects were shown to change based on the methods with which thermal stimuli were added to music (such as temporal correspondence) and on the type of stimuli (warming or cooling). From these results, we have concluded that the ThermOn system has the potential to enhance the emotional experience when listening to music.

In this paper we describe a novel method for detecting bends and folds in fabric structures. Bending and folding can be used to detect human joint angles directly, or to detect possible errors in the signals of other joint-movement sensors due to fabric folding. Detection is achieved through measuring changes in the resistance of a complex stitch, formed by an industrial coverstitch machine using an un-insulated conductive yarn, on the surface of the fabric. We evaluate self-intersecting folds which cause short-circuits in the sensor, creating a quasi-binary resistance response, and non-contact bends, which deform the stitch structure and result in a more linear response. Folds and bends created by human movement were measured on the dorsal and lateral knee of both a robotic mannequin and a human. Preliminary results are promising. Both dorsal and lateral stitches showed repeatable characteristics during testing on a mechanical mannequin and a human.

Wearable computing technologies have drawn a great deal of attention to context-aware systems, which recognize user context by using wearable sensors. Conventional context-aware systems use accelerometers or magnetic sensors, but these sensors need a wired or wireless connection to a storage device or a data processor such as a PC for storing and processing data. Conventional microphone-based context-recognition methods can capture the surrounding context by audio processing, but they cannot recognize complex user motions. In this paper, we propose a context recognition method using sound-based gesture recognition. In our system, the user wears a microphone and small speakers, which generate ultrasonic sound, on his/her body. The system recognizes gestures on the basis of the volume of the generated sound and the Doppler effect. The former indicates the distance between the neck and wrists, and the latter indicates the speed of motions. The speaker just transmits ultrasonic sound, and the recording device, which is an ordinary voice recorder, just records the sound; thus there is no need to communicate with a storage device for storing data. Moreover, since we use ultrasonic sound, our method is robust to different sound environments. Evaluation results confirmed that when there was no environmental sound generated by other people, the recognition rate was 86.6% on average. When there was environmental sound generated by other people, the recognition rate was 64.7% with the proposed method, compared with 57.3% without it.
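
How a Doppler shift maps to motion speed can be shown with a short worked sketch. It uses the textbook relation for a moving emitter and a stationary receiver; which worn device moves, the 20 kHz tone, and the function names are assumptions for illustration, not details taken from the paper.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees Celsius

def observed_frequency(f_emitted, v_toward, c=SPEED_OF_SOUND):
    """Frequency heard by a stationary receiver when the emitter
    approaches at v_toward m/s (negative values mean it recedes)."""
    return f_emitted * c / (c - v_toward)

def radial_speed(f_emitted, f_observed, c=SPEED_OF_SOUND):
    """Invert the relation above to recover the emitter's speed
    along the line between emitter and receiver."""
    return c * (1.0 - f_emitted / f_observed)

# Hypothetical example: a 20 kHz tone from a speaker moving at 1 m/s
# toward the microphone is shifted upward by roughly 58 Hz.
shifted = observed_frequency(20000.0, 1.0)
speed = radial_speed(20000.0, shifted)
```

A shift of a few tens of hertz at hand-movement speeds is small relative to the carrier, so a practical system would need enough frequency resolution in its spectral analysis to separate it.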

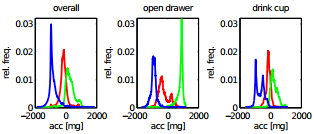

The majority of activity recognition systems in wearable computing rely on a set of statistical measures, such as means and moments, extracted from short frames of continuous sensor measurements to perform recognition. These features implicitly quantify the distribution of data observed in each frame. However, feature selection remains challenging and labour-intensive, rendering a more generic method to quantify distributions in accelerometer data much desired. In this paper we present the ECDF representation, a novel approach to preserve characteristics of arbitrary distributions for feature extraction which is particularly suitable for embedded applications. In extensive experiments on 6 publicly available datasets we demonstrate that it outperforms common approaches to feature extraction across a wide variety of tasks.
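
One plausible reading of an ECDF-based feature is sketched below: each sensor axis of a frame is summarized by its empirical quantile function sampled at evenly spaced probabilities, which gives a fixed-length vector regardless of frame length. The abstract does not give the exact procedure, so the number of sample points and the function name `ecdf_features` are assumptions.

```python
import numpy as np

def ecdf_features(frame, n_points=5):
    """Summarize a frame by sampling the inverse empirical CDF of each axis.

    frame: (n_samples,) or (n_samples, n_axes) sensor readings
    returns: (n_axes * n_points,) feature vector, grouped by axis
    """
    frame = np.asarray(frame, dtype=float)
    if frame.ndim == 1:
        frame = frame[:, None]
    probs = np.linspace(0.0, 1.0, n_points)
    # Quantiles along the time axis; the result has shape (n_points, n_axes).
    quantiles = np.quantile(frame, probs, axis=0)
    return quantiles.T.reshape(-1)
```

For a three-axis accelerometer frame this yields `3 * n_points` values. Computing it needs only a sort per axis, which fits the abstract's remark about suitability for embedded applications.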

Nowadays, users are overwhelmed by the ever-growing number of smartphone apps they can choose from. Reliable smartphone app prediction that benefits both users and phone system performance is very desirable. However, real-world smartphone app usage behavior is a complex phenomenon driven by multiple factors arising from individual users or broader user communities. In this paper, we develop an app usage prediction model that leverages three key everyday factors that affect app decisions: (1) intrinsic user app preferences and user historical patterns; (2) user activities and the environment as observed through sensor-based contextual signals; and (3) the shared aggregate patterns of app behavior that appear in specific user communities. While rapid progress has been made recently in smartphone app prediction, existing prediction models tend to focus on only one of these factors. Furthermore, our approach to prediction is the first work to elevate community similarity to a first-class citizen in app usage prediction modeling. Using a detailed 3-week field trial with 35 people along with the analysis of app usage logs of 4,606 active smartphone users worldwide, we demonstrate that the proposed model can not only make more robust application recommendations, but also drive significant smartphone system optimizations.

In this paper, we introduce a wearable partner agent that makes physical contacts corresponding to the user's clothing, posture, and detected contexts. Physical contacts are generated by combining haptic stimuli and anthropomorphic motions of the agent. The agent performs two types of behavior: a) it notifies the user of a message by patting the user's arm and b) it generates emotional expression by strongly enfolding the user's arm. Our experimental results demonstrated that haptic communication from the agent increases the intelligibility of the agent's messages and familiar impressions of the agent.

In this paper, we introduce a wearable partner agent that makes physical contact corresponding to the user's clothing, posture, and detected contexts. Physical contact is generated by combining haptic stimuli and anthropomorphic motions of the agent. The agent performs two types of behavior: (a) it notifies the user of a message by patting the user's arm, and (b) it expresses emotion by strongly enfolding the user's arm. Our experimental results demonstrated that haptic communication from the agent increases the intelligibility of the agent's messages and the sense of familiarity with the agent.

Assistance dogs have improved the lives of thousands of people with disabilities. However, communication between human and canine partners is currently limited. The main goal of the FIDO project is to research fundamental aspects of wearable technologies to support communication between working dogs and their handlers. In this pilot study, the FIDO team investigated on-body interfaces for assistance dogs in the form of electronic textiles and computers integrated into assistance dog vests. We created four different sensors that dogs could activate (based on biting, tugging, and nose gestures) and tested them on-body with three assistance-trained dogs. We were able to demonstrate that it is possible to create wearable sensors that dogs can reliably activate on command.

Wearable technology, specifically e-textiles, offers the potential for interacting with electronic devices in a whole new manner. However, some may find the operation of a system that employs non-traditional on-body interactions uncomfortable to perform in a public setting, which impacts how readily a new form of mobile technology may be received. Thus, it is important for interaction designers to take into consideration the implications of on-body gesture interactions when designing wearable interfaces. In this study, we explore third-party perceptions of a user's interactions with a wearable e-textile interface. This two-pronged evaluation examines the societal perceptions of a user interacting with the textile interface at different on-body locations, as well as observers' attitudes toward on-body controller placement. We performed the study in the United States and South Korea to gain cultural insights into the perceptions of on-body technology usage.

Firefighters work in dangerous and unfamiliar situations under a high degree of time pressure, and thus teamwork is of utmost importance. Relying on trained automatisms, firefighters coordinate their actions implicitly by observing the actions of their team members. Consequently, being out of sight is likely to reduce coordination. The aim of this work is to automatically detect when a firefighter is within sight of other firefighters and to visualize the proximity dynamics of firefighting missions. In our approach, we equip firefighters with smartphones and use the built-in ANT protocol, a low-power communication radio, to scan for nearby devices quickly and efficiently in order to measure proximity to other firefighters. In a second step, we cluster the proximity data to detect moving sub-groups. To evaluate our method, we recorded proximity data of 16 professional firefighting teams performing a real-life training scenario. We manually labeled six randomly selected training sessions, involving 51 firefighters, to obtain 79 minutes of ground truth data. On average, our algorithm assigns each group member to the correct ground truth cluster with 80% accuracy. Incorporating height information derived from atmospheric pressure signals increases group assignment accuracy to 95%.

This paper presents a method for estimating the 3D shape of an object being observed using wearable gaze tracking. Starting from a sparse environment map generated by a simultaneous localization and mapping (SLAM) algorithm, we use the gaze direction positioned in 3D to extract the model of the object under observation. By letting the user look at the object of interest, and without any feedback, the method determines 3D points of regard by back-projecting the user's gaze rays into the map. The 3D points of regard are then used as seed points for segmenting the object from captured images, and the calculated silhouettes are used to estimate the 3D shape of the object. We explore methods to remove outlier gaze points that result from the user saccading to non-object points, as well as methods for reducing the error in the shape estimation. Exploiting gaze information in this way enables users of wearable gaze trackers to perform tasks as complex as object modelling in a hands-free and even feedback-free manner.

Reading is a ubiquitous activity that many people even perform in transit, such as while on the bus or while walking. Tracking reading enables us to gain more insight into the expertise level and potential knowledge of users, working towards a reading log that tracks and improves knowledge acquisition. As a first step towards this vision, in this work we investigate whether different document types can be automatically detected from visual behaviour recorded using a mobile eye tracker. We present an initial recognition approach that combines special-purpose eye movement features with machine learning for document type detection. We evaluate our approach in a user study with eight participants and five Japanese document types and achieve a recognition performance of 74% using user-independent training.

We propose an eyeglass-based videophone that enables the wearer to make a video call without holding a phone (that is to say, “hands-free”) in the mobile environment. The glasses have 4 (or 6) fish-eye cameras to widely capture the face of the wearer, and the images are fused to yield one frontal face image. The face image is also combined with the background image captured by a rear-mounted camera; the result is a self-portrait image taken without holding any camera device at arm’s length. Simulations confirm that 4 fish-eye cameras with 250-degree viewing angles (or 6 cameras with 180-degree viewing angles) can cover 83% of the frontal face. We fabricate a 6-camera prototype and confirm the possibility of generating the self-portrait image. This system suits not only hands-free videophones but also other applications such as visual life logging and augmented reality.

Google's Glass has captured the world's imagination, with new articles speculating on it almost every day. Yet, why would consumers want a wearable computer in their everyday lives? For the past 20 years, my teams have been creating living laboratories to discover the most compelling reasons. In the process, we have investigated how to create interfaces for technology which are designed to be "there when you need it, gone when you don't." This talk will attempt to articulate the most valuable lessons we have learned, including some design principles for creating "microinteractions" to fit a user's lifestyle.

We investigate the potential of a smartphone to measure a patient’s change in physical activity before and after a surgical pain relief intervention. We show feasibility for our smartphone system providing physical activity from acceleration, barometer and location data to measure the intervention’s outcome. In a single-case study, we monitored a pain patient carrying the smartphone before and after a surgical intervention over 26 days. Results indicate significant changes before and after intervention, particularly in physical activity in the home environment.

This paper presents a technology to design and fabricate nanostructured gas sensors in fabric substrates. Nanostructured gas sensors were fabricated by constructing ZnO nanorods on fabrics including polyester, cotton and polyimide for continuous monitoring of the wearer's breath gas, which can indicate health status. The developed fabric-based gas sensors demonstrated gas sensing by monitoring the change in electrical resistance upon exposure to acetone and ethanol gases.

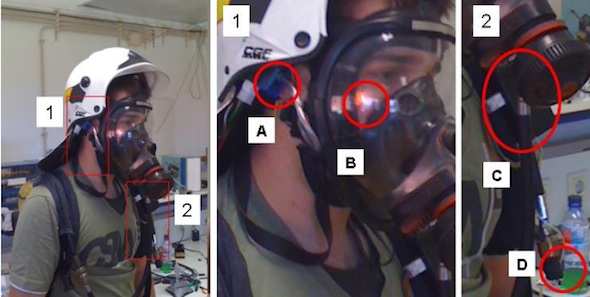

The aim of this study was to develop a reversible contact using adhesive-bonded neodymium magnets. To implement this, suitable magnets and adhesives are chosen according to defined requirements, and the conductive bonds between textile and magnet are optimized. For the latter, three different bonds are produced and tested in terms of achievable conductivity and mechanical strength. It is shown that gold-coated neodymium magnets are most appropriate for such a contact. The resulting electrical resistances are reproducible and low, with sufficient mechanical strength.

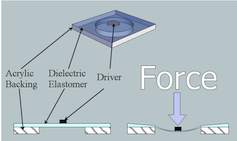

In this paper we propose FIREMAN, a low-cost system for online monitoring of firefighters' ventilation patterns when using Self-Contained Breathing Apparatus (SCBA), based on a specific hardware device attached to the SCBA and a smartphone application. The system implementation allows the detection of relevant ventilation patterns while providing feasible and accurate estimation of SCBA air consumption.

We explore the use of Dielectric Elastomer (DE) micro-generators as a means to scavenge energy from foot-strikes and power wearable systems. While they exhibit large energy densities, DEs must be closely controlled to maximize the energy they transduce. Towards this end, we propose a DE micro-generator array configuration that enhances transduction efficiency, and the use of foot pressure sensors to realize accurate control of the individual DEs. Statistical techniques are applied to customize performance for a user's gait and enable energy-optimized adaptive online control of the system. Simulations based on experimentally collected foot pressure datasets, empirical characterization of DE mechanical behavior, and a detailed model of DE electrical behavior show that the proposed system can achieve between 45 and 66 mJ per stride.

E-textile practitioners have improvised innovatively with existing off-the-shelf electronics to make them textile-compatible. However, there is a need to further the development of soft materials or parts that could replace regular electronics in a circuit. As a starting point, we look at the possibility of creating a repository of specific motifs with different resistance values that can be easily incorporated into e-embroidery projects and used instead of normal resistors. The paper describes our larger objective and gives an overview of the first experiment, which compares the resistance values of a simple pattern embroidered multiple times with conductive yarn to observe its behavior and reliability.

This work discusses ways of measuring particulate matter with mobile devices. Solutions using a dedicated sensor device are presented along with a novel method of retrofitting a sensor to a camera phone without the need for electrical modifications. Instead, the flash and camera of the phone are used as the light source and receptor of an optical dust sensor, respectively. Experiments to evaluate the accuracy are presented.

We explore the feasibility of utilizing large, crowd-generated online repositories to construct prior knowledge models for high-level activity recognition. Towards this, we mine the popular location-based social network, Foursquare, for geo-tagged activity reports. Although the data are unstructured and noisy, we are able to extract, categorize and geographically map people's activities, thereby answering the question: what activities are possible where? Using Foursquare text only, we obtain a testing accuracy of 59.2% with 10 activity categories; using additional contextual cues such as venue semantics, we obtain an increased accuracy of 67.4%. By mapping prior odds of activities via geographical coordinates, we directly benefit activity recognition systems built on geo-aware mobile phones.

We explore the washability of conductive materials used in creating traces and touch sensors in wearable electronic textiles. We perform a wash test measuring the change in resistivity after each of 10 cycles of washing for conductive traces constructed using two types of conductive thread, conductive ink, and combinations of thread and ink.

In this paper we describe a wristwatch-like device using a 3-axis gyro sensor to determine how a player is strumming the guitar. The device was worn on the right-handed player's right hand to evaluate the strumming action, which is important for playing the guitar musically in terms of the timing and the strength of notes. With a newly developed calculation algorithm to specify the timing and the strength of the motion when the guitar string(s) were strummed, beginners and experienced players were clearly distinguished without hearing the sounds. The beginners as well as intermediate-level players showed a fairly large variation of the maximum angular velocity around the upper arm for each strum. Since the developed system reports the evaluation results with a graphical display as well as sound effects in real time, the players may improve their strumming action without playing back the performance.

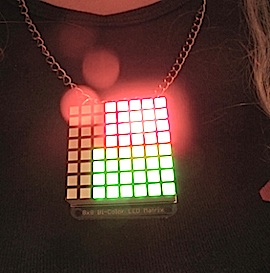

Research in dolphin cognition and communication in the wild is still a challenging task for marine biologists. Most problems arise from the uncontrolled nature of field studies and the challenges of building suitable underwater research equipment. We present a novel underwater wearable computer enabling researchers to engage in an audio-based interaction between humans and dolphins. The design requirements are based on a research protocol developed by a team of marine biologists associated with the Wild Dolphin Project.

The EEG Visualising Pendant is intended for use in awkward or intense social situations to indicate when the wearer’s attention is waning. The pendant uses an EEG (Electroencephalography) headset that sends data to an LED (Light Emitting Diode) pendant to visualise the wearer’s EEG attention and meditation data to themselves and others.

Astronauts must wear a heavy, rigid, and cumbersome space suit during Extravehicular Activity (EVA) and during EVA training that takes place in the Neutral Buoyancy Lab (NBL). While the suit is a life-saving portable environment, it can also cause injuries to the wearer. This is problematic, especially during the long period of training that precedes a mission. To resolve this issue, designers need a better understanding of the relationship between the suit and the body in order to locate and resolve sources of injury or restriction. A wearable sensing garment was developed to explore two different approaches to detecting pressure on the body resulting directly from contact with the Hard Upper Torso (HUT) portion of the space suit. This garment uses two different types of sensors to collect data that can be used in identifying and resolving sources of injury and restriction in the space suit.

This paper describes the creation of a proof-of-concept design for an interactive glove that augments the mirror therapy therapeutic protocol in the treatment of a paretic limb following a stroke. The glove has been designed to allow the user to stimulate the fingertips of their affected hand by tapping the fingers of their unaffected hand, using Force Sensing Resistors to trigger Linear Resonance Actuators on the corresponding fingers. This paper outlines the design considerations and methods used to create the glove, and discusses the potential for further work in the pursuit of a clinical trial.

This paper covers the development of a garment for body position monitoring and gesture recognition. We developed a comfortable, unobtrusive, textile-based system that can be used to monitor the wearer’s arm position in real time. This involved testing for best sensor placement and the creation of a patch-based textile pattern for sensor stability. This Lilypad-based system delivers a low-profile, wireless garment that is fully functional in zero-gravity environments, is not constrained by the limitations of stationary motion input devices, and outputs an intuitive visualization of sensor data in real time. Created for the Human Interface Branch of NASA, the concepts within this garment can be used to monitor which tasks might lead to repetitive stress injuries or fatigue, and to capture measures such as reaction time and reach envelope.

In this paper, we describe Photonic Bike Clothing IV: for cute cyclist. The concept of the design is the combination of formal and functional garments. The garments are made using the Lilypad Arduino kit, heating pads, and solar cells to enable the functionality of this clothing during city riding.

Strokes&Dots is a micro-collection using DIY and open design practices aimed at fostering creative innovation and advancement in the design aesthetics of wearable technologies and fashion-tech. This paper explores the current state of the art of wearable technologies; the 3lectromode platform; and the key goals in creating Strokes&Dots as an advancement platform for present and future fashion-tech design.

This corset dress was designed to illustrate what a fictitious celebrity performer will wear in the future. It features an illuminated fiber optic fabric embellishment. The corset base is made out of organic cotton and hemp silk using traditional and modern professional corset-making techniques. The silhouette created is an extreme hourglass figure, accented by the illuminated strips draped around it. The corset dress is part of the “Cybelle Horizon” fashion collection by Rachael Reichert, based on a short story of the same name, written by Rachael Reichert.

Fashion design with embedded electronics is a rapidly expanding field at the intersection of fashion design, computer science, and electrical engineering. Each of these practices can require a deep level of knowledge and experience in order to produce practical garments that are suitable for everyday use. Lüme is an electronically infused clothing collection that integrates dynamic, user-customizable elements driven wirelessly from a common mobile phone. The design and engineering of the collection focus on integrating the electronics so that they can be easily removed or embedded when desired, creating pieces that are easy to wash and care for. Subsequent iterations of the collection will focus on low-power electronics, alternative power sources, local and global positioning, potential applications, and collaborative computing.

This paper explores Fashion Acoustics and wearable computers as fashionable and practical musical instruments for live multi-modal performance. The focus lies on Fashion Acoustics in the form of an E-Shoe: a high-heeled shoe guitar, made by art collective Chicks on Speed, designer Max Kibardin and technologist Alex Posada. It introduces the idea of building miniaturised-musical-computer-wearables using industrialised techniques in shoe manufacturing, where the electronics are embodied in the design, promoting a more creative application of technology on the body for live intermedia performances. The approach is illustrated by describing design aims, methods and usages in theatrical settings, and by detailing practice-driven research experiments in this cutting-edge field of Fashion Acoustics.
